Facebook clears the first hurdle on its brain-computer interface

Facebook has issued an update on its work with researchers at the University of California, San Francisco (UCSF) to develop a brain-computer interface (BCI).

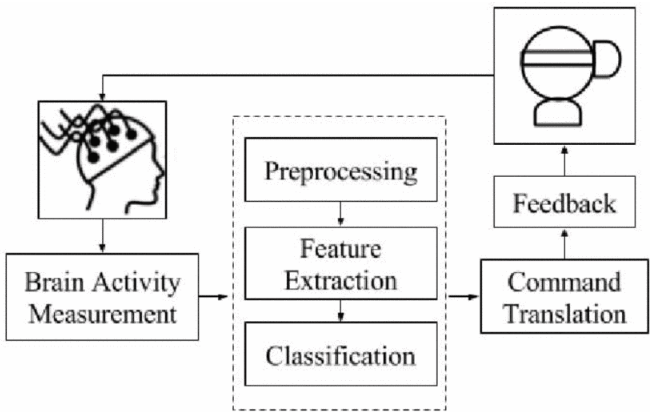

The BCI will translate your brain waves into commands that connected devices can interpret. You could use it to control digital devices such as virtual reality (VR) and augmented reality (AR) equipment without voice commands or touch. This update follows the announcement of the company's plans in 2017. Two years have passed since then, and Facebook has now shown how far it has come in building its mind-reading apparatus.

The post states that they have just cleared the first hurdle: researchers have found that brain waves can indeed be decoded. As shown in an article published in the journal Nature Communications, UCSF scientists could determine which words participants spoke solely by monitoring data from electrodes implanted on the surface of their brains. The researchers add that these findings, obtained in an interactive, conversational setting, "demonstrate real-time decoding of speech, which has important implications for patients who cannot communicate."

Help for people with paralysis

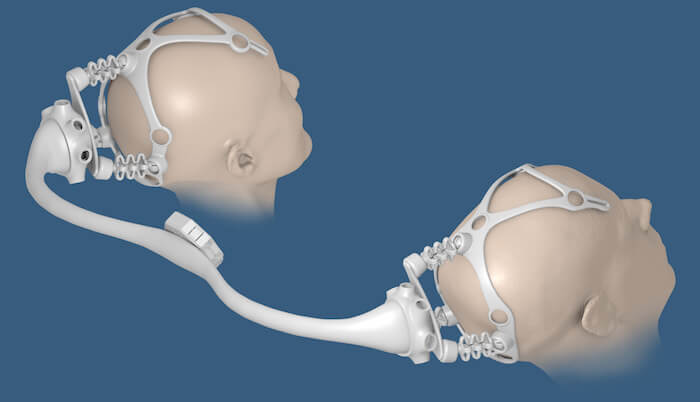

The research is not aimed at a consumer-market application; it is all about giving paralyzed people a better way to connect with the world around them. The researchers are in the early stages, with plenty of room for progress. For instance, the decoding success rate was 61 percent, and the vocabulary used during testing was severely restricted. To be functional in a system intended for mainstream consumers, the error rate should be at most 17 percent, with a real-time decoding speed of about 100 words per minute. Facebook also wants the device to support a 1,000-word vocabulary and to require no brain surgery.
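To make those targets concrete, here is a minimal Python sketch. It assumes that "error rate" means word error rate (word-level edit distance divided by the number of reference words), which the article does not spell out; the function names and the example phrases are hypothetical, and only the thresholds (17 percent, 100 words per minute, 1,000 words) come from the text above.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] = edit distance between the reference words seen so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # word missing from the hypothesis
                            curr[j - 1] + 1,      # extra word in the hypothesis
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


def meets_targets(wer: float, words_per_minute: float, vocabulary_size: int) -> bool:
    """Check a decoder against the targets quoted in the article."""
    return wer <= 0.17 and words_per_minute >= 100 and vocabulary_size >= 1000


# Hypothetical example: one wrong word out of four.
wer = word_error_rate("select the home screen", "select a home screen")
print(wer, meets_targets(wer, words_per_minute=100, vocabulary_size=1000))
```

Even a single wrong word in a four-word command already exceeds the 17 percent ceiling, which gives a feel for how demanding the target is.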

The BCI that Facebook envisions would not need to be directly connected to your brain. Instead, it would get the data it needs in a safe, non-invasive way, using fiber optics or lasers to measure oxygen saturation in the brain from outside the body. The gadget would work much like a pulse oximeter, the clip-like sensor you have probably had attached to your index finger at the doctor's office. Scientists say that, with Facebook's support and a team of their own on the job, it could be 10 years or less before their vision comes to fruition.

The aim here is not to build a device that will replace verbal communication. Rather, words that represent commands, such as "home," "select," and "delete," should be decoded reliably, which would speed up the way users interact with modern VR and AR systems.

AR glasses

Speaking of AR, Facebook sees AR glasses as the ideal candidate for BCI implementation: "AR's promise lies in its ability to connect individuals to the world around them and to each other seamlessly." Rather than staring down at a phone screen or breaking out a laptop, we could maintain eye contact and retrieve valuable information and context without ever missing a beat.

Would you like the likes of Facebook to "open your mind"? The social networking company already knows your spending habits, interests, problems, rants, and messages, and now it could gain access to your innermost thoughts. The data it could collect would be rudimentary at first, but this, too, would develop. Knowing Facebook, it is perfectly fair to think it will use and misuse the data for its own benefit.

For those who really need it, the brain-computer interface seems a ground-breaking and highly beneficial piece of technology. But before such devices reach the market, additional ground rules need to be set for consumer-level use. The human mind is one of the few remaining sanctuaries of free thought, and having it tapped for the sake of convenience is a truly terrifying prospect, one that may very well lead to an Orwellian dystopia.

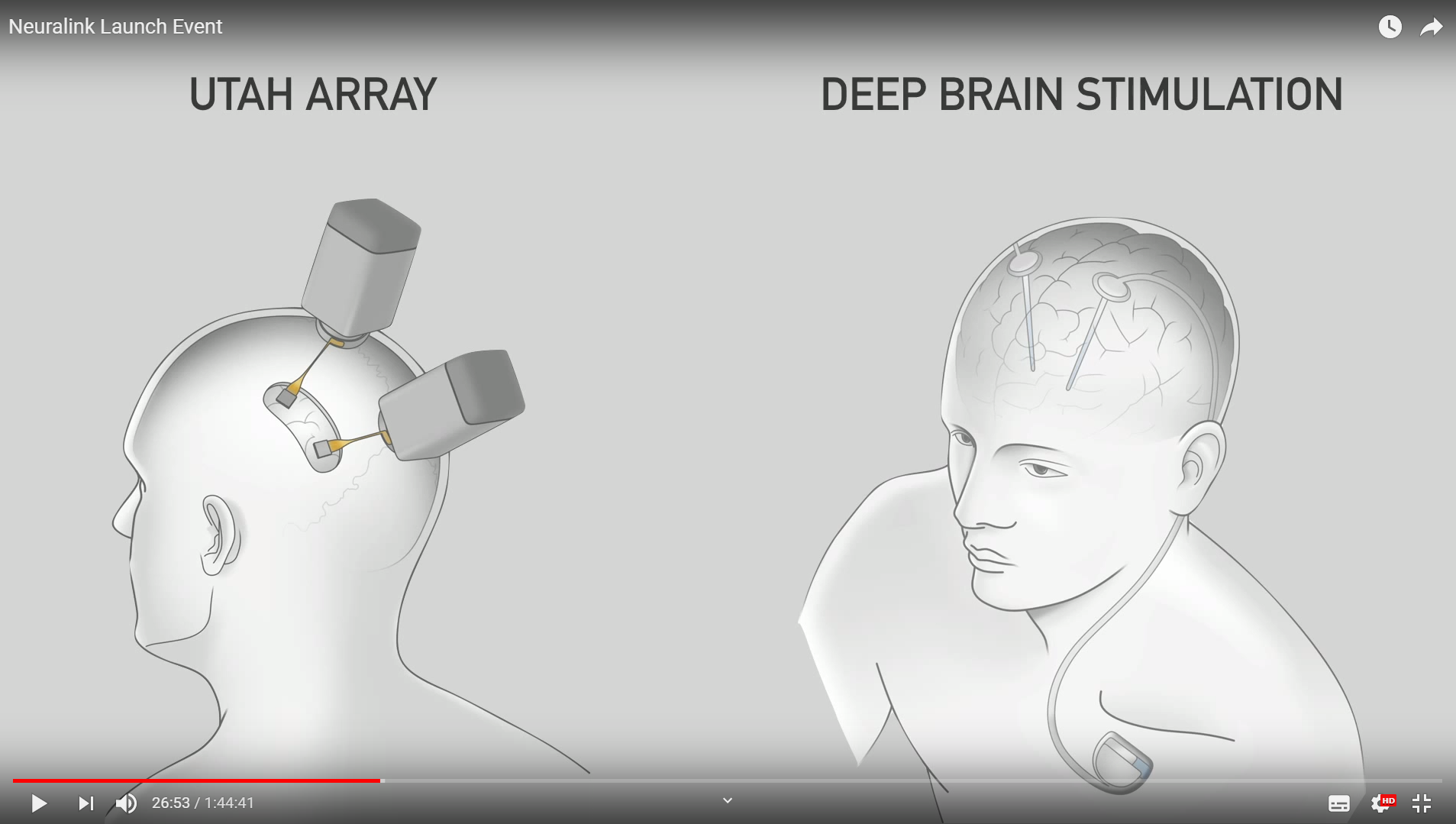

I'm optimistic about the technology, and there's no question in my mind that if Facebook fails, others, such as Elon Musk's Neuralink, might "get it right." I'm also highly concerned, however, as there are no assurances that this technology will not be exploited, if not by corporations, then by government agencies vying for information and more ways to monitor their subjects.

What you need to know about brain-computer interfaces for virtual and augmented reality

I've been curious about brain-machine interfaces for a long time: I think they're the future of human-computer interaction and I'd love to play with them, particularly in combination with the technologies I love most: virtual reality and augmented reality. Neurable's recent showcase at SIGGRAPH 2017 increased this curiosity of mine, so I decided to read up extensively on the subject in order to write an outstanding article about it.

I actually got a little depressed while doing my research, because I realized that Wait But Why's Tim Urban has already written an amazing 38,000-word article on the subject, so there is no way for me to beat that epicness. That's all right: as the Chinese people say, x.

So, I highly suggest that you read that article (it's going to take you hours, but it will give you a full introduction to BCIs from all sides: how the brain works, what kinds of BCIs we have, what kinds of BCIs we're going to have in the future, and so on). And now I'm going to write mine: I should still be able to write a decent article on the subject, focusing on its uses with XR technologies.

Ok, what's a BCI?

BCI stands for Brain-Computer Interface; Brain-Machine Interface (BMI) is a synonym. The meaning is exactly the same: an interface that enables the human brain to interact with an external device, such as a computer.

Imagine that your mind could be read by a computer, so that if you thought "Open Microsoft Word," the computer would actually open Word without you even touching your keyboard or mouse. That would be pretty cool. With a brain-machine interface, this could happen: if you had a device inside your brain that reads what you think and communicates it to your computer, you could interact with your computer using only your thoughts, and use your hands only to smash the keyboard when Word crashes and loses your last 4 hours of hard work.

This does not apply only to PCs, but to any device, such as smartphones (you could talk to Siri with your mind), cars (you could start the engine just by thinking about it), and so on. It's a pretty cool technology and there are many companies trying to make this magic happen.

If you want a popular movie example, think of The Matrix: the plug in the back of the head is a BCI used to access the Matrix's virtual world.

Why is this important for AR and VR?

Because to exploit the full potential of XR, we have to exploit the full potential of our brain.

Let's think about virtual reality: at the moment we can decently simulate only two or three senses: vision, audio, and touch. And I say decently because we're far from perfect emulation: the highest-resolution commercial VR headset, the Pimax 8K, is far from matching human vision (close to 16K per eye). The remaining two senses, smell and taste, are still experimental fields, as I've already written in my dedicated articles, and we are really in the early stages. Many other sensations are also difficult to replicate: for example, we don't have a way to convey heat or pain to the VR user. In addition, VR suffers from motion sickness and from the problem of finding a suitable form of locomotion.

Many people dream of full-dive immersion, as in Sword Art Online, but this is out of reach with current technology. And even in the future, recreating full immersion using only sensors and actuators on our body could be problematic: we would need a haptic suit to have haptics over our entire body, but wearing one is uncomfortable. We would need devices in our nose and mouth to reproduce smell and taste. We would have to wear sensors on every single part of our body to have full VR... This is unworkable.

The perfect solution for achieving total immersion would be to interface directly with the brain: instead of stimulating every part of the body to produce every sensation, it would be more effective to stimulate just one, our brain. For example, if we are in a game set in the Sahara Desert, we could tell the brain to feel hot, and we would feel our entire body heating up and sweating as if we were really there. If we manage this, we will have full immersion in virtual reality, as in The Matrix... a little frightening, but also the dream of all enthusiasts (apart from the "machines taking control of the world" part of the story).

In the short term, to be a little less visionary and more realistic, reading the brain will be very important in psychological applications of VR: while treating a specific phobia within VR, the BCI could sense the user's stress levels and tailor the experience to them. A BCI would also solve one of the biggest problems of VR interfaces: input. Typing on a virtual keyboard is simply a poor experience... entering words just by thinking them would be a big step forward for the XR user experience. And, of course, there are marketing scenarios, such as detecting the user's engagement when he or she sees a specific object.
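The phobia-treatment idea can be sketched as a simple feedback loop. This is only an illustration under stated assumptions: the BCI is assumed to deliver a stress estimate normalized between 0 and 1, the experience is assumed to have discrete intensity levels, and the function name and thresholds are hypothetical rather than taken from any real product.

```python
def next_level(level: int, stress: float,
               calm_below: float = 0.3, stressed_above: float = 0.7,
               max_level: int = 10) -> int:
    """Pick the next exposure level from the current one and a stress estimate.

    stress is a hypothetical BCI reading normalized to the range 0..1.
    """
    if not 0.0 <= stress <= 1.0:
        raise ValueError("stress must be between 0 and 1")
    if stress > stressed_above:
        level -= 1   # user is overwhelmed: make the scene milder
    elif stress < calm_below:
        level += 1   # user is calm: increase the exposure slightly
    # Otherwise the user is in the comfortable band: keep the level.
    return max(0, min(max_level, level))


# Hypothetical session: simulated stress readings drive the scene intensity.
level = 5
for reading in [0.2, 0.5, 0.9, 0.8]:
    level = next_level(level, reading)
    print(f"stress={reading:.1f} -> level {level}")
```

Changing the level by only one step per reading keeps the experience from swinging abruptly, which seems a sensible default for a therapy setting, though a real system would need clinical input.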

The Digital Taste Lollipop. This is the best device we have right now to simulate taste in VR. It looks pretty rough to me (Image by Nimesha Ranasinghe)

The keyboard of the V dashboard app. It's very difficult to type with VR controllers.

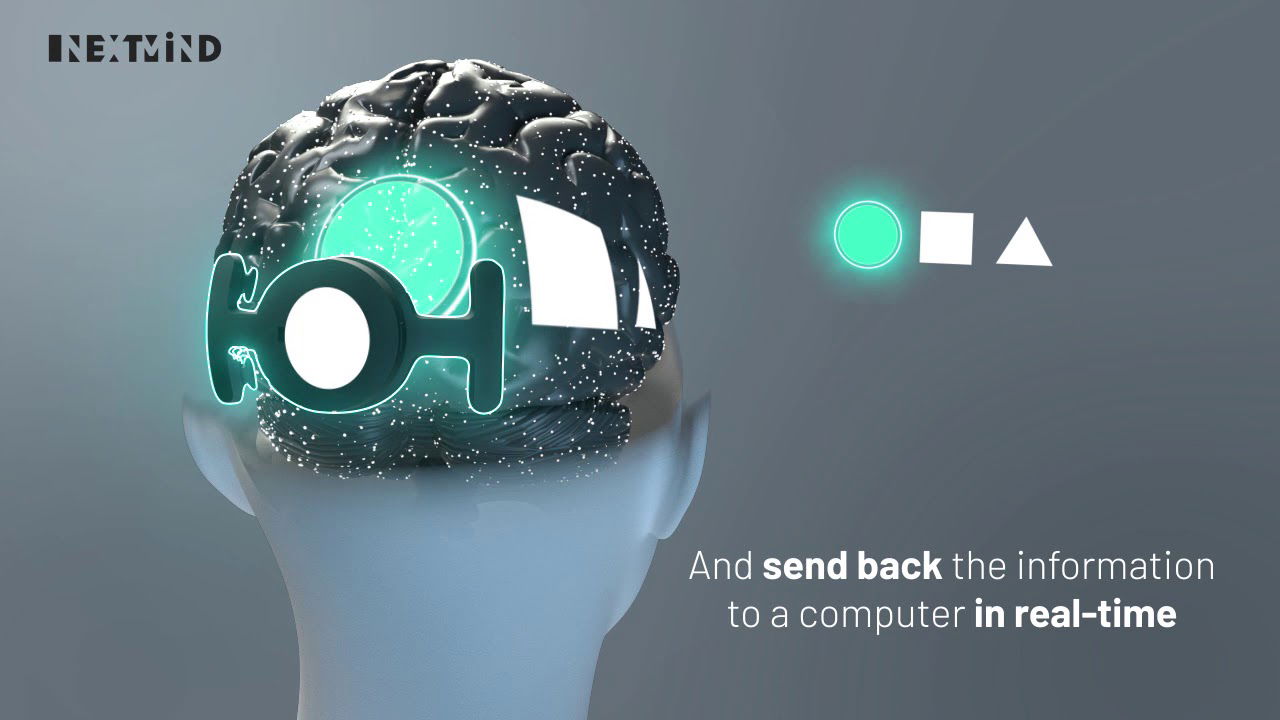

Similar considerations apply to AR, but there is a further step: if we're going to wear AR glasses all day in the future, an interface that spares us from constantly raising our hand to tap the air (as with the HoloLens) will make the AR experience a lot more convenient. Further, there may be an AI that analyzes what we see through our glasses, and this AI could be linked to the brain, constantly evaluate what we think, and use this data to give us contextual suggestions (e.g. I'm hungry + a bakery that makes great croissants is next to me = the AI suggests I stop there for breakfast). This could enable a great deal of augmented reality magic.

If I had a brain-machine interface connected to me right now, it would know that I'd like to eat this croissant breakfast!

If we had a direct connection to the brain, we could do incredible things with XR technology... so why aren't we doing it? There are many reasons... one of which is that we first have to know our brain well.

How much do we know about the brain?

Wait But Why gives us an in-depth picture of how much we know about our brain:

An accurate representation of our knowledge of the brain (Image by Wait But Why)

Our brain is one of the most complex organs of our body, and the one we know the least about. Quoting Tim's post:

Another professor, Jeff Lichtman, is even harsher. He begins his courses by asking his students: "If everything that you need to know about the brain is a mile, how far have we walked in this mile?" He says that students give answers like three-quarters of a mile, half a mile, a quarter of a mile, etc., but he believes the real answer is "about 3 inches."

There are different explanations for this. One of the most interesting is that we use the brain itself to study the brain.

A third professor, the neuroscientist Moran Cerf, shared with me an old neuroscience saying that explains why attempting to master the brain is a bit of a catch-22: "If the human brain were so simple that we could understand it, we would be so simple that we couldn't."

So if the brain were simple enough to be understood, we would be too simple to understand it anyway. That's fascinating.

What is the vision for the future?

From what we've seen so far, in the short term we'll have EEG devices that will allow us to interact with AR/VR experiences using only our brain. Using eye tracking, brainwaves, and AI, we will be able to select and click objects without using our hands; we will be able to send simple commands and maybe even type words without messing with controllers. Advertisers like Google and Facebook will use emotion recognition to understand how we engage with ads (and this is scary), but the technology will also be incredible for social interactions (e.g. detecting that a person is feeling harassed can help him or her stay safe in a social environment).

Eventually, there could be a move away from this approach, and we will have to use chips inside our brains. It's scary, I know; that's why people with disabilities will be the first to use these devices, since they will want to try everything possible to be well again. That's why both Neuralink and Kernel first target this group and seek to help people with brain cancer, paralysis, or similar problems. As I have already said, most BCI technology out there that requires something implanted inside the body is currently used on people with disabilities (people who are blind or deaf, for instance).

If we can build a decent implanted BCI that poses no risk to the patient, the technology will become more popular and start to target healthy individuals. Innovators and affluent people will try it first (as happens with every innovation, including AR and VR themselves). The technology will then become cheaper and safer and will become widespread: everyone will use it naturally. There will be social opposition at the beginning, as with any new technology, but later people will not be able to live without it.

What is this chip going to be useful for? The idea of Musk and the others is to build something like an additional layer of our brain that will connect us with AI and with other people.

Our brain comprises three layers: the first one functions at a more animal level (eating, sleeping, having sex, etc.), the second one performs more complex tasks typical of mammals, and the third one holds the intellect that makes us genuinely human (mathematics, reasoning, ethics, etc.). Of course, we use our brain naturally, and we don't care which layer has made a given decision. Sometimes love troubles set our brain and our heart against each other, and that's when we realize that we are made of different components.

And the ultimate future?

Our brain is faster than our body: it's like a CPU that finds all the other PC components too damn slow. For our brain, the body is a limitation; with a brain interface, it could unleash all its potential, as in a dream, as in VR. Why do we even need to have a body?

As Futurama predicted: we could survive with just our heads (Image from Futurama Wikia)

The last stage is full-dive VR, as in The Matrix. We could enter a simulation with our whole selves and perceive it as real life, and we could do whatever we want inside this simulation. We would have infinite possibilities, given that a simulation has no rules it must obey. So it could be even more than The Matrix: in The Matrix, people are just people, as in the real world... why should we have this limitation? We could fly, we could have six legs; as long as the brain and the AI could think of something, it could happen. Ultimately, a BCI could make VR possible without a headset... just by injecting sensations into our brains. VR could become our life. Or our lives... like a computer, we could live separate lives in parallel, who knows.

It all depends on what this super-brain can handle. And maybe we will end up living in harmony with each other, thanks to the smart AI that will coordinate us through our fourth brain layer, so we will avoid killing each other as we have done for all these years. It is all a mind-bending thought, but it may be humanity's final evolution. One proposed answer to the Fermi paradox is that we have not yet encountered aliens because they no longer need to exist in the physical world: they have evolved so much that they live all the time in full-dive VR. This may as well be the case for us.

The article was written by Amit Caesar.
